I don’t need to look into a crystal ball to believe that many aspects of our lives will, to some extent, become AI-assisted, if not entirely AI-driven. It is beyond dispute that the era of AI has arrived, and that AI will fundamentally reshape our future.

But for AI to be a positive game-changer, safety is an essential consideration. As Premier Li Qiang put it at the recent World Economic Forum annual meeting: “Like other technologies, AI is a double-edged sword. If it is applied well, it can do good and bring opportunities to the progress of human civilisation and provide great impetus to the industrial and scientific revolution.”

Managing the risks of AI is no easy task. Given the complexity and novelty of AI technology, organisations may find the regulatory landscape difficult to navigate.

Organisations in Hong Kong that develop, customise or use AI systems involving personal data are duty-bound to comply with the Personal Data (Privacy) Ordinance. Because the ordinance is technology-neutral legislation, its application does not depend on the technology employed. In other words, there is no lacuna: the ordinance applies equally to the handling of personal data by AI.

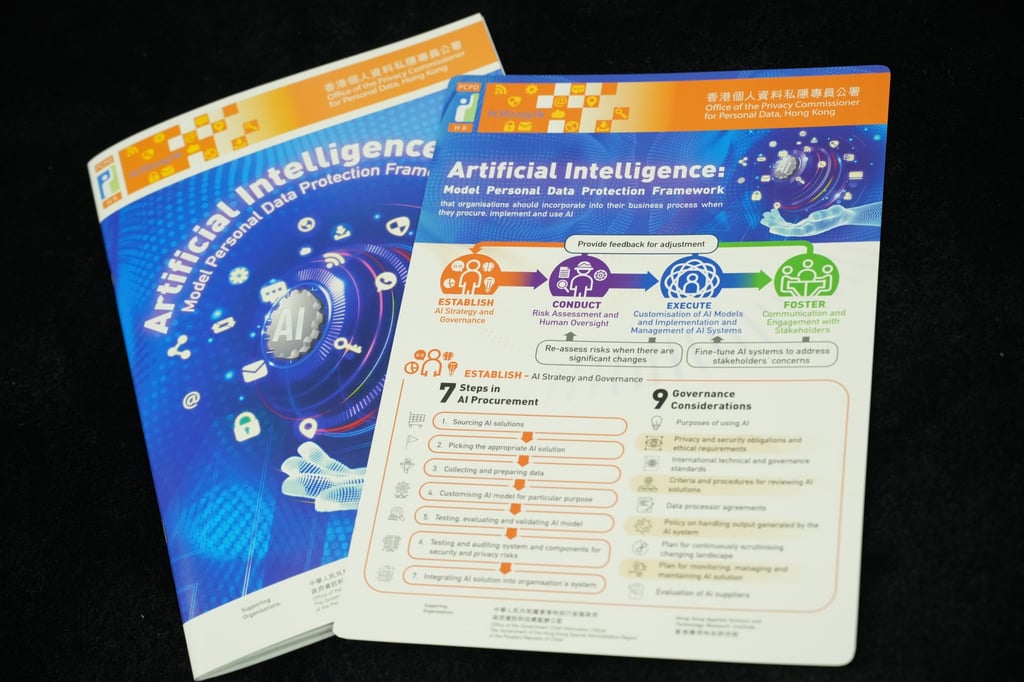

To help organisations meet these obligations, a model framework has been introduced. It covers recommended measures for four general business processes: establishing AI strategy and governance; conducting risk assessment and human oversight; customising AI models and implementing and managing AI systems; and communicating and engaging with stakeholders.

The framework provides step-by-step guidance on the considerations and measures to be taken throughout the life cycle of AI procurement, implementation and use. This would materially reduce the need for organisations to seek external advice from system developers, contractors or even professional service providers.

Moreover, in line with international practice, the framework recommends that organisations adopt a risk-based approach, implementing risk management measures commensurate with the risks posed, including an appropriate level of human oversight. This enables organisations to save costs by concentrating their resources on the oversight of higher-risk AI applications.

Thus, the model framework has been introduced to facilitate the implementation and use of AI in a safe and cost-effective manner, rather than to inhibit it.

AI is set to transform almost every facet of our lives. Whether AI becomes a game-changer for better or for worse hinges on our actions today. By fostering the safe and responsible use of AI, together we can build a trustworthy AI-driven world.

Ada Chung Lai-ling is Hong Kong’s Privacy Commissioner for Personal Data